The idea that Solid-State Drives (SSDs) outperform the Hard Disk Drives (HDDs) of yesteryear has become common knowledge amongst administrators. And, for many applications, SSDs have now proven themselves. Many administrators have not looked any further than this. However, if you are building a machine with performance in mind, you might take a more critical look at that SSD.

This is exactly the rabbit hole I went down. I am currently working on my lab, setting up a virtualized environment consisting of a Windows Server 2022 Active Directory domain controller and eight Windows 11 workstations to mimic a small enterprise environment. This environment will serve as a small test bed for some scripting projects of mine. These scripts perform batch jobs in an enterprise environment, and I will be using the lab for development and testing.

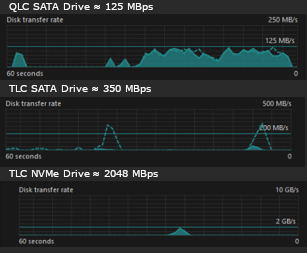

In setting up this lab, I assumed that my bottom-tier SSD would be more than adequate. After all, it far exceeds the performance of a spinning-disk dinosaur, right? Well, yes, but there is still ample room for improvement. The first iteration was configured with the virtual disks located on a 2 TB Samsung QVO SATA drive. The Q in QVO indicates the type of flash chip used in the drive: QLC NAND, which stores 4 bits per cell. To store 4 bits, each cell has to distinguish 16 voltage states, so more voltage checks have to take place per cell, and read/write times are slower. When shutting down multiple VMs simultaneously, I noticed serious performance issues. As you can see in the chart below, I saw read/write throughput maxing out at about 125 MBps. I expect to grow this lab environment in the future, so I felt that if my hardware was already being taxed on the first iteration, it was inadequate.

The second iteration used a 2 TB Samsung EVO SATA drive instead. This drive employs TLC NAND flash technology. The cells in these chips store 3 bits each, so they have 8 voltage states, half as many as QLC cells. This results in much faster read/write times and significantly higher throughput. To test this drive, I shut down and started all of my VMs at once, as shown in the chart below. I saw over double the throughput of the QVO drive, and instead of sustained high utilization, I observed short blips of approximately 350 MBps. Much better! This is what I was looking for! However, during my search to understand the cause of my performance concerns, I discovered NVMe drives.
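The bits-per-cell comparison above comes down to simple arithmetic: a cell that stores n bits has to distinguish 2^n voltage states. Here is a minimal Python sketch of that relationship. The `voltage_states` helper and the `CELL_TYPES` table are names I made up for illustration, and SLC and MLC are included only for context.

```python
# Illustrative only: number of voltage states a NAND cell must distinguish
# for a given number of stored bits (2 ** bits).

def voltage_states(bits_per_cell: int) -> int:
    """Return how many distinct voltage states a cell needs."""
    return 2 ** bits_per_cell

# Common NAND cell types and their bits per cell.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}

for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {voltage_states(bits)} voltage states")
```

Each extra bit doubles the number of states the controller has to tell apart, which is why moving from TLC to QLC costs read/write speed.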

There are very reasonably priced NVMe drives that make SATA SSDs look absurdly slow. NVMe drives connect over PCIe lanes instead of the SATA bus, which lets them achieve much higher throughput than SATA is capable of. In addition, many of these drives employ 3D NAND technology, which is yet another improvement. If your motherboard has the capability, these are very appealing for performance applications. And, at the present time, they’re really only slightly more expensive than SATA drives. As you can see in the chart below, the real-life throughput I logged was almost 6 times that of the EVO SATA drive, at approximately 2048 MBps (2 GBps)! Incredible!
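To put the three readings side by side, here is a small Python sketch that works out the ratios from the approximate peak figures quoted in this post. The `OBSERVED_MBPS` table and the `speedup` helper are names I made up for illustration; the numbers are the rough values I read off my charts, not benchmark-grade measurements.

```python
# Approximate peak throughput observed in this lab, in MBps (from the charts).
OBSERVED_MBPS = {
    "QVO SATA (QLC)": 125,
    "EVO SATA (TLC)": 350,
    "NVMe": 2048,
}

def speedup(fast_mbps: float, slow_mbps: float) -> float:
    """Return how many times faster the first figure is than the second."""
    return fast_mbps / slow_mbps

evo_vs_qvo = speedup(OBSERVED_MBPS["EVO SATA (TLC)"], OBSERVED_MBPS["QVO SATA (QLC)"])
nvme_vs_evo = speedup(OBSERVED_MBPS["NVMe"], OBSERVED_MBPS["EVO SATA (TLC)"])
nvme_vs_qvo = speedup(OBSERVED_MBPS["NVMe"], OBSERVED_MBPS["QVO SATA (QLC)"])

print(f"EVO vs QVO:  {evo_vs_qvo:.2f}x")
print(f"NVMe vs EVO: {nvme_vs_evo:.2f}x")
print(f"NVMe vs QVO: {nvme_vs_qvo:.2f}x")
```

The NVMe-to-EVO ratio is where the "almost 6 times" figure comes from. Measured against the QVO drive I started with, the gap is far larger.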

My specific use case is testing the execution of batch jobs on systems that would normally have distributed resources, so my host machine needs to achieve performance reasonably comparable to the cumulative performance of a similar collection of distributed endpoints. Otherwise, such high throughput would not be warranted. But given how reasonably priced these NVMe drives are compared to their SATA counterparts, I think they deserve a second look where applicable. I will be locating my virtual hard disks on the NVMe drive, and using the QVO drive to store backup files.